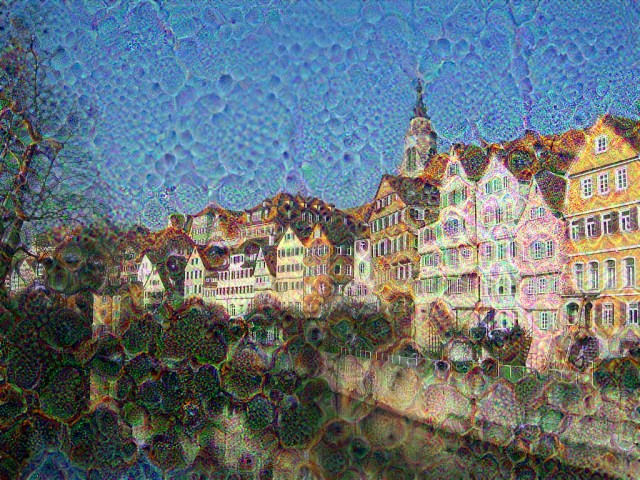

There has been a development in image generation that I find absolutely fascinating. It’s based on Generative Adversarial Networks (GANs), which are a very powerful and promising model for image generation. A recent modification to this architecture, the Conditional GAN, has been making some waves, as it allows for the translation of one type of image to another. Its drawback is that it requires databases of before and after images with roughly 1:1 correspondence, which are difficult to find, dramatically limiting the applications. However, very recently, the folks at UC Berkeley made a few additional modifications which remove this requirement, creating the CycleGAN architecture. I’ve downloaded the source and been using the Flickr API to do some experiments with it, including trees <=> flowers, summer <=> fall, summer <=> winter, and landscape <=> desert. Legal disclaimer: I don’t own the rights to any of the images here. Unfortunately I neglected to get the photographers’ info when I scraped the images, but if anyone knows the creator of any of the original images please let me know. Here are a few example images I’ve gotten so far:

Trees<=>flowers doesn’t work very well, which isn’t too surprising, but it is pretty entertaining sometimes what it does. It found pretty early on that it can do decently by just inverting the colors, but eventually the behavior got more complex and started making gross brown flowers:

Cool black rose

An example of the gross brown flowers

Makes some really trippy landscapes

summer<=>fall works just… absurdly well. It’s a bit scary, and some of the results are really pretty. With some parameter tuning and more (and better sanitized) data, this could be really cool! I’m definitely going to do some more experimentation with this.

summer<=>winter also works, though I couldn’t get it looking as good as the authors of the paper did. These examples are a bit cherry-picked, though: it never really learned how to fully get rid of snow, but it’s really good at color balance adjustments that make it feel way more wintry/summery.

The “desertifier” was largely unsuccessful. It never learned how to make things into sand like I’d hoped, but I didn’t train it for nearly as long as the others, and the success cases give me hope that it could learn:

Essentially what I’ve found is that the network doesn’t like to totally get rid of anything or hallucinate new things, even when it would make sense to do so. For example, if it gets rid of some water to make a desert, it might not be able to put it back, and because of how the CycleGAN works, it needs to be able to reconstruct the original image. What it is really good at is changing colors and patterns; it is less good at structural stuff. I’d bet that you might be able to improve this behavior with skip connections between the initial transformation and the reconstruction pass. This would be similar to the “u-net” encoder-decoder architecture described, except the connections between the encoder and the decoder would also connect the first step of the titular cycle to the second. It might defeat the purpose a bit, but as long as there’s still an information bottleneck it might help.
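To make the reconstruction constraint concrete, here is a toy sketch of a cycle-consistency term: translate an image to the other domain, translate it back, and measure how far the result is from the original. Everything in it is an assumption for illustration. The "images" are short lists of pixel intensities, the "generators" are hand-written functions rather than trained networks, and the function names are made up. It is not the CycleGAN code itself.

```python
# Toy cycle-consistency check: loss = mean |backward(forward(x)) - x|.
# "Images" are flat lists of pixel intensities in [0, 1]. The functions below
# are hypothetical stand-ins for the two generators, not trained networks.

def l1_distance(a, b):
    """Mean absolute difference between two equally sized pixel lists."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)

def cycle_loss(x, forward, backward):
    """Translate x to the other domain and back, then compare with x."""
    return l1_distance(backward(forward(x)), x)

def invert(img):
    """An invertible color change: nothing is lost, so it can be undone."""
    return [1.0 - p for p in img]

def erase_bright(img):
    """A lossy edit: zero out every pixel brighter than 0.5 (e.g. remove water)."""
    return [0.0 if p > 0.5 else p for p in img]

def identity(img):
    """After erasing, the return trip has nothing to work with; it can only guess."""
    return list(img)

image = [0.0, 0.25, 0.75, 1.0]
color_loss = cycle_loss(image, invert, invert)          # color change, then undo it
erase_loss = cycle_loss(image, erase_bright, identity)  # erase, then fail to restore
print(color_loss, erase_loss)
```

The color change round-trips with zero loss, while the erasing edit is penalized because the erased pixels cannot be recovered. That is consistent with the behavior above: recoloring is cheap under this term, and removing content is expensive.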

Robo-Chef strikes again (barbecue sauce edition)

Finally, I discovered that I never did any experimentation with the sequence length I used to train my robo-chef, which would put a hard limit on how long it could remember things. Here are some recipes from a network trained with a much longer memory (3.5x longer):
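Before the recipes, a quick aside on why sequence length matters. This sketch assumes a character-level model trained on fixed-length chunks of text, which this post does not actually confirm for the robo-chef. The window sizes (20 and 70, a 3.5x ratio) and the helper names are made up for illustration. The point is only that the network can never see an ingredient and the instruction that uses it together in training if they are further apart than one window.

```python
# Hypothetical sketch: cut a corpus into fixed-length training windows for
# next-character prediction, then check whether any single window contains
# both mentions of an ingredient.

def make_windows(text, seq_len):
    """Return (input, target) pairs; target is the input shifted by one char."""
    windows = []
    for start in range(0, len(text) - seq_len, seq_len):
        chunk = text[start:start + seq_len + 1]
        windows.append((chunk[:-1], chunk[1:]))
    return windows

def sees_both_mentions(windows, word):
    """True if some window's input contains the word at least twice."""
    return any(inp.count(word) >= 2 for inp, _ in windows)

recipe = (
    "title: corn soup\n"
    "1 c buttermilk\n"
    "1 c chicken stock\n"
    "stir in buttermilk.\n\n"
)

short_windows = make_windows(recipe, 20)  # assumed short sequence length
long_windows = make_windows(recipe, 70)   # 3.5x longer

print(len(short_windows), sees_both_mentions(short_windows, "buttermilk"))
print(len(long_windows), sees_both_mentions(long_windows, "buttermilk"))
```

With the short windows, the ingredient line and the instruction that uses it never land in the same training example. With the longer window they do.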

Low Temperature (0.3)

title: corn soup

categories: soups

yield: 1 servings

1 c buttermilk

1 c chicken stock

1/2 c butter

1 c sour cream

1 ts salt

1/8 ts pepper

1 ts paprika

1 c flour

1 ts baking powder

1 ts sugar

1/2 ts baking soda

1/2 ts cayenne pepper

1 tb butter

1 c cheddar cheese, shredded

combine cornmeal, flour, salt and pepper. stir in buttermilk and

corn flakes. stir in cheese and cheese. bake in a greased 9-inch

square pan in a 350 f oven for 20 minutes. serve with sauce. source:

pennsylvania dutch cook book – fine old recipes, culinary arts press,

1936.

That ain’t a soup! But look, it remembered the buttermilk! If you follow the instructions, you might end up with… some kind of cheesy corn-flake pastry? Weird.

title: cranberry cream cheese cake

categories: cakes, cheesecakes

yield: 1 cake

1 pk cream cheese, softened

1 c sugar

2 eggs

1 ts vanilla

1 c flour

1 ts baking powder

1/2 ts salt

1 c chopped walnuts

1 c chopped nuts

1 c chopped nuts

heat oven to 350 degrees. combine crumbs, sugar and cinnamon in a

small bowl. add egg and mix well. spread over crust. bake at 350

degrees for 10 minutes. cool. cut into squares. makes 12 servings.

source: canadian living magazine, apr 95 presented in article by diana

rosenberg

That’s a lot of nuts! Also it forgot the cranberries. Once again, the instructions seem to be totally independent of the ingredients, but would make… some kind of cinnamon crumb pie? That actually sounds kind of delicious. I bet you could probably make something really tasty out of this one.

title: bran muffins (cookbook style)

categories: breads, fruits

yield: 12 servings

1 c flour

1 ts salt

1 ts baking powder

1/2 ts salt

1 c sugar

1 c milk

1 c milk

1 egg

1/2 c milk

1/2 c buttermilk

1 ts vanilla

1 c chopped pecans

combine flour, baking powder, salt and sugar. cut in shortening with

pastry blender until mixture resembles coarse meal. add egg and mix to

blend. stir in buttermilk and egg mixture. stir in raisins and nuts.

pour into greased 9-inch square baking pan. bake at 350 degrees for

30 minutes. cool in pan on rack 10 minutes. remove from pans and cool

completely. store in airtight containers. makes 12 servings. source:

pennsylvania dutch cook book – fine old recipes, culinary arts press,

1936.

Wow! Not a bran muffin but this sounds… really coherent. This is one of the few times I think the instructions might result in a somewhat reasonable food.

BUT WAIT, are you ready for BARBECUE SAUCE?

title: crunchy barbecue sauce

categories: bbq sauces

yield: 1 servings

1/2 c brown sugar

1/4 c worcestershire sauce

2 tb brown sugar

1 tb cornstarch

1 tb water

1 ts cornstarch

1/2 ts salt

1 tb cornstarch

1 tb water

1 tb soy sauce

1 ts sesame oil

1 tb cornstarch

1 ts sugar

1 ts soy sauce

1 ts sesame oil

combine all ingredients except salt and pepper in a large saucepan.

bring to a boil, reduce heat and simmer for 1 hour. add cornstarch

and cook until thickened. stir in chicken and cook until thickened.

serve over rice.

And here we go. Crunchy Barbecue Sauce. That full 1/4 cup of worcestershire sauce. The tons and tons of cornstarch. Oh. Man. What is going on? Weirdly enough, the instructions seem spot-on (except the last two sentences get a bit weird). Here’s a condensed list of the ingredients just to see if it makes sense:

2/3 c brown sugar

1/4 c worcestershire sauce

4 tb cornstarch

2 tb water

1/2 ts salt

2 tb soy sauce

2 ts sesame oil

1 ts sugar
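To double-check that tally, here is a small sketch that sums the repeated ingredient lines with exact fractions. The unit conversions (3 ts per tb, 16 tb per cup) are standard US measures, and the helper name is made up for illustration. The exact totals come out slightly under the hand-condensed list above: 5/8 c brown sugar, 3 tb plus 1 ts cornstarch, and 1 tb plus 1 ts soy sauce.

```python
from fractions import Fraction

# Teaspoons per unit, using standard US kitchen measures.
TS_PER_UNIT = {"ts": 1, "tb": 3, "c": 48}

# Ingredient lines copied from the crunchy barbecue sauce recipe.
lines = [
    ("1/2", "c", "brown sugar"),
    ("1/4", "c", "worcestershire sauce"),
    ("2", "tb", "brown sugar"),
    ("1", "tb", "cornstarch"),
    ("1", "tb", "water"),
    ("1", "ts", "cornstarch"),
    ("1/2", "ts", "salt"),
    ("1", "tb", "cornstarch"),
    ("1", "tb", "water"),
    ("1", "tb", "soy sauce"),
    ("1", "ts", "sesame oil"),
    ("1", "tb", "cornstarch"),
    ("1", "ts", "sugar"),
    ("1", "ts", "soy sauce"),
    ("1", "ts", "sesame oil"),
]

def total_teaspoons(ingredient_lines):
    """Sum each ingredient's amount, converted to teaspoons."""
    totals = {}
    for amount, unit, name in ingredient_lines:
        totals[name] = totals.get(name, 0) + Fraction(amount) * TS_PER_UNIT[unit]
    return totals

totals = total_teaspoons(lines)
for name, teaspoons in totals.items():
    print(f"{name}: {teaspoons} ts")
```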

The amounts might need adjusting, but at least it has the right flavors going on. I guess the amount of sugar is why it’s crunchy. But wait! There’s more! Barbecue sauces #2, #1, and #2 [sic]:

title: barbecue sauce #2

categories: sauces

yield: 1 servings

1 c chicken broth

1 c chicken broth

1 tb cornstarch

1 tb soy sauce

1 ts sugar

1 ts sesame oil

1 ts sesame oil

1 ts sesame oil

1 ts sesame oil

1 ts sugar

1/4 ts salt

1 ts sesame oil

1 ts sesame oil

1 tb sesame oil

1 tb cornstarch

1 ts sesame oil

1 ts sesame oil

1 ts sesame oil

1 ts cornstarch

1 ts sesame oil

1 ts sesame oil

1 ts sesame oil

1 ts sugar

1 ts sesame oil

1 ts sesame oil

1 ts sesame oil

1 tb cornstarch

1 tb sesame oil

1 tb water

1 tb sesame oil

1 tb chinese chili paste

cut the chicken into small pieces. heat the oil in a large skillet over

medium heat. add the chicken and cook, turning the chicken frequently,

until the chicken is cooked through, about 5 minutes. remove the chicken

to a plate. remove the chicken from the skillet and keep warm. add the

chicken to the pan and stir-fry for 1 minute. add the chicken and

stir-fry for 1 minute. add the chicken and cook, stirring, for 1

minute. add the chicken and continue to cook for another 2 minutes.

return the chicken to the pan. add the chicken and stir-fry for 1

minute. add the chicken broth and cook for 1 minute. add the chicken

and stir-fry for 2 minutes, then add the sesame oil and stir until

combined. add the chicken and stir-fry for 1 minute. add the chicken

broth and cook, stirring constantly, until the sauce thickens. stir in

the chicken broth and cook for another 2 minutes. stir in the cornstarch

mixture and cook for 1 minute. stir in the chicken broth and cook,

stirring, until the sauce thickens. serve immediately.

serves 4.

from the files of al rice, north pole alaska. feb 1994

Did you remember to cook the chicken? How about the sesame oil? Ok, good. What about the chicken? Also this recipe is from “north pole, Alaska.”

title: barbecue sauce #1

categories: sauces

yield: 1 servings

1 c water

2 tb soy sauce

1 tb brown sugar

1 tb cornstarch

1 tb soy sauce

1 tb sugar

1 tb cornstarch

1 tb water

1 tb cornstarch

1 tb water

1 tb cornstarch

1 tb water

combine all ingredients in a large saucepan. bring to a boil, reduce heat

and simmer, uncovered, for 1 hour, stirring occasionally. stir in

cornstarch mixture and cook 3 minutes more. stir in cornstarch mixture

until smooth. add salt and pepper to taste. serve over rice.

Yeah, ok, that one makes more sense. Actually that seems to be a legit barbecue sauce. Cool! Unfortunately it’s also the most boring barbecue sauce ever because there are only actually four ingredients and one of them is water.

title: barbecue sauce #2

categories: sauces

yield: 1 servings

1 c brown sugar

1 c water

2 tb soy sauce

1 tb sugar

1 ts salt

1 ts salt

1 ts chili powder

1 ts ground cinnamon

1 ts ground coriander

1 ts ground cardamom

1 ts ground cumin

1 ts ground coriander

1 ts ground ginger

1 ts ground coriander

1 ts ground cardamom

1 ts ground cardamom

1 ts ground coriander

1 ts ground cinnamon

1/4 ts ground cloves

1/4 ts ground cloves

1/4 ts ground cardamom

1/4 ts ground cloves

1/4 ts ground cloves

1/2 ts ground cumin

1/4 ts ground cloves

1/2 ts ground coriander

1/2 ts ground cloves

1/2 ts ground cloves

1/2 ts ground coriander

1/2 ts ground cardamom

1/4 ts ground cloves

1/4 ts ground cumin

1/4 ts ground coriander

1/4 ts ground cumin

1/2 ts ground cardamom

1/2 ts ground cardamom

1/4 ts ground cloves

1/4 ts ground cardamom

1/4 c chopped fresh parsley

combine all ingredients in a large saucepan. bring to a boil, reduce

heat and simmer for 15 minutes. add chicken and cook 10 minutes more.

serve over rice or noodles.

recipe by : recipes from the cooking of indian dishes by cathy star

(c) 1994. typed for you by karen mintzias

Oh jeez what happened. This one starts off well and then SPICES. At least it’s more interesting than the last one!

Okay let’s turn up the heat and see what happens.
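“Heat” here is the sampling temperature. The sketch below shows the usual meaning of that knob in text samplers: the model’s scores are divided by the temperature before being turned into probabilities. It assumes the robo-chef samples this way, which this post doesn’t spell out. The scores are invented and the function name is hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Invented scores for three candidate next characters.
logits = [2.0, 1.0, 0.5]

# Probability of the top-scoring candidate at each temperature used in this post.
top_prob = {t: softmax_with_temperature(logits, t)[0] for t in (0.3, 0.55, 0.8, 1.1)}
for t, p in top_prob.items():
    print(f"temperature {t}: top choice probability {p:.3f}")
```

Low temperatures pile almost all the probability onto the top choice, which fits the repetition in the low-temperature recipes. Higher temperatures spread probability onto unlikely characters, which is where the invented words come from.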

Reasonable temperature (0.55)

title: barbecue sauce for steaks

categories: sauces, marinades, low-cal

yield: 1 servings

1 c burgundy wine

1 tb soy sauce

1 c water

1 tb vegetable oil

1 garlic clove, crushed

1 md onion, chopped

1 garlic clove, minced

4 chicken breasts, boned,

-skinned, cut into 1/2-inch

-strips

1 tb cornstarch

1 tb water

1 tb cornstarch paste

1 tb cold water

mein sauce: combine the marinade ingredients and set aside.

in a large pot, bring the water to a boil. add the garlic and stir

for 2 minutes. add the spices and reduce the heat. simmer, covered,

for 10 minutes. remove the pan from the heat and store in an

airtight container.

makes about 1 cup.

Hahaha, another barbecue sauce! Nein, mein sauce! Too bad the instructions are basically boiled garlic. At this point we’re done with the barbecue sauce (alas).

title: grilled portabello mushrooms with cashews

categories: mexican, vegetables, indian

yield: 4 servings

1 tb mustard seeds

1 tb salt

1 tb ground ginger

2 tb peppercorns

1 tb ground fenugreek

1 tb ground cardamom

1 tb ground cardamom

1/4 ts ground cardamom

1 ts ground cardamom

1 ts ground cumin seeds

1 tb chili flakes

1 ts chili powder

1 ts ground tumeric

1 ts ground cumin

mix all the ingredients together and serve cold.

Oh god this one made me laugh so hard. It’s so beautifully simple! I hope you like spices, because that’s what’s for dinner. Anyone know if this would make any sense at all as a spice mix?

title: hot-pepper caribbean black bean sauce

categories: sauces, vegetables

yield: 1 servings

1 c chicken broth

1 c cooked rice

2 tb soy sauce

1 tb sugar

2 tb soy sauce

1 tb sesame oil

1 c chicken broth

1 ts sugar

1 ts salt

1/4 c soy sauce

2 tb cornstarch mixed with 1/4

-cup water, as needed

serves 4.

place the rice in a saucepan and bring to a boil. add the chicken broth

and cook under medium heat for 5 minutes. remove the chicken from

the pot. add the chicken and cook for another 10 minutes. remove

the chicken from the pan and set aside.

add the chicken and the remaining ingredients and simmer 15 minutes.

skim off the excess fat and return the chicken to the pot.

serve the chicken and sauce with the chicken and a salad.

So wait, what am I supposed to do with the chicken again?

Ok, now let’s really get cookin’!

High Temperature (0.8)

title: junk-joint salet burger

categories: pasta, pork

yield: 6 servings

stephen ceideburg

1 c spam luncheon meat

1 bag fully cooked bacon

2 c sliced green onions

1 stalk fresh basil – chopped

1 clove garlic — sliced

1 c sauerkraut

1/3 c sugar

4 ts lemon-soy sauce

1 ts hot sauce

1/2 ts salt

1/2 c water

in large skillet over medium heat, heat oil over medium heat; cook

garlic until soft, but not browned, about 15 minutes. add remaining

ingredients except noodles; cook for 2 to 3 minutes or until thickened,

stirring after 5 minutes. stir in raisins and simmer for 1 minute.

serve over chicken.

source: taste of home mag, june 1996

It’s a what? I guess if you go to a Junk-Joint and order a Salet Burger this is what you get. Also those ingredients… substitute ground hamburger for spam and you might be able to make a decent, but weird, burger. What’s really neat is that the instructions remember that this is supposed to be pasta (which the ingredients conveniently forgot).

title: chicken & milk grits

categories: poultry

yield: 2 servings

1 whole chicken

– skinned, fat from fresh

– chicken breast

– cut into 1/2 inch strips

1/4 c low-fat cottage cheese

1 (10-oz.) can whole tomatoes

— drained, chilled and

– drained

1 tb olive oil

2 tb sour cream

salt and pepper

4 flour tortillas

cooked spaghetti

mushrooms

parsley

sauted mushrooms

combine flour, salt & pepper in a medium bowl. cut in margarine

until particles and can leave from tip. pat the mixture into a baking dish

and sprinkle with the cheese. bake, basting every 15 minutes, until

crust is golden brown, turning the cheese over after 35 minutes.

meanwhile, mix the egg and water in a small bowl. stir in the remaining

ingredients. pour grated cheese into the pie shell and bake for

20 minutes. remove from oven. sprinkle with roquefort on top.

What… what is this? The name is weird, the ingredients are… confusing (tortillas and spaghetti?) and the instructions are for… some weird cheese pie. I don’t really know what to make of this. I think I may need to call a chef to reconcile some of these recipes for me. That said, if you did manage to actually make this it might not be half bad if you made some pretty liberal substitutions and improvisations.

But wait, are you ready to

title: do the cookies

categories: cookies, breads

yield: 1 servings

1 c sugar

1 c shortening

1 tb baking powder

1 c buttermilk

1 ts soda

2 eggs

1 ts vanilla

1/2 ts almond extract

cinnamon

————————–filling——————————-

4 c confectioners sugar

2/3 c water

sprinkles

chocolate chips, karola or

crystallized chocolate

-red berries, for garnish

preheat oven to 350. mix cake mix, salt, flour and salt. beat egg

whites with salt until foamy. gradually add remaining 1/2 cup sugar,

beating until stiff. beat in vanilla extract and vanilla and fold into

batter. pour into remaining tins. bake in a 350 degree oven for 30

minutes until done. cool 1 hour before removing from pan. per

serving: 101 calories, 1 g protein, 12 g carbohydrate, 3 g fat, 3 g

carbohydrate, 0 mg cholesterol, 30 mg sodium.

note: if simple way to do not mix the frosting together with egg and

sugar and your dough will hold the mixture in the freezer.

DO THE COOKIES? Oh man. This is a full, internally consistent, somewhat logical cookie recipe. And, AND, it has nutrition facts. So you know it’s healthy! Wow. Do the cookies!

title: anglesey’s french prepared chicken wings

categories: italian, poultry

yield: 6 servings

1 chicken breast meat, cut into

-serving pcs.

1 c cooked rice

1 c vinegar

1/2 c vinegar

4 cloves garlic, minced

1 tb cider vinegar

1 tb dijon mustard

2 tb red wine vinegar

4 tomatoes, chopped

1 tb celery, fresh, snipped

1 red pepper, julienned,

-seeded and diced

1 tb parsley, chopped

1/4 c red wine vinegar

salt

freshly ground black pepper

thaw and drain chicken (roll up the sides of the chicken). trim and

cut the chicken into strips. combine the chicken with the pork mixture

with the salt, pepper and thyme. mix everything together gently and

add to the chicken mixture. cover and refrigerate for at least 4 hours

or overnight.

…and then what? Wait, do you serve this raw? It put SO MUCH WORK into those ingredients (look at all that vinegar) and then forgot to actually cook the meat (arguably the most important step).

title: grilled portobello mushrooms

categories: vegetables

yield: 4 servings

1 md garlic clove, crushed

1 sm onion, chopped

2 tb butter

8 oz fresh plantains, leaves,

-frozen

– thawed

1/4 c raisins

1 tb balsamic vinegar

1 tb worcestershire sauce

2 tb tamarind sauce

1/8 ts pepper

1 ts lemon juice

1/2 ts garlic, minced

salt to taste

freshly ground pepper

put first 4 ingredients in a bowl, mix well and stir into corn mixture.

in a 2-quart saucepan, heat the butter and 2 tablespoons of the

frankfurter seasoning and add the cooked rice and stir until the sauce

thickens and serves 4 to 6. makes about 2 1/2 cups

recipe by : cooking live show #cl8726

This one is actually so close. If only it actually included portobello mushrooms! Also, I want to emphasize: 8 ounces of fresh plantain leaves, frozen, then thawed. WHAT.

title: chocolate sunchol apple cake

categories: cakes, chocolate, vegetables

yield: 1 servings

1 c brown sugar packed

2/3 c butter or margarine

1/3 c cocoa

2 ts vanilla

1/2 ts almond extract

1 c chopped nuts

1/2 c coconut

3/4 c sour amount of cold water

1/2 c flour

1/2 ts baking soda

1/4 ts salt

in a large bowl, cream margarine. add sugar, flour, vanilla, and eggs.

mix thoroughly. pour into prepared pan. bake 45 minutes or until

oblong starts to pull away from sides of pan and a wooden pick inserted

into center comes out clean. cool in pan on wire rack for 5 minutes.

remove cake from pans to wire rack. remove from cookie sheet to wire

rack and cool.

Another legit pastry… thing! Also with some confusing oddities.

title: home-made potato salad

categories: salads, greek, vegetables, pork

yield: 6 servings

1 lg ripe avocado,cubed

1/2 ts lemon juice

1 c chicken broth

1/2 ts ground cumin

1/4 ts salt

1/8 ts pepper

1/4 ts cumin

1 c cooked rice

1/4 c water

1 c broccoli florets

3 c chicken broth

1/4 c parsley, finely chopped

2 tb green onions, finely chopped

pinch nutmeg

break up cooked peas. sauté garlic in oil until soft. stir in flour

and stir until smooth. combine all ingredients. cook and stir over medium

heat until sauce boils and thickens. cool

1/4 hour before serving.

That’s a weird potato salad. But you know what, it might be good! Dang, I’m getting hungry.

I also had some fun turning the temperature up REALLY high. The recipes get… out there. Have a look:

Crazy high temperature (1.1)

title: horey dipping sauce-(among-burlas)

categories: japanese, sauces, chutneys

yield: 1 cup

1 c cream and port:

1/8 c whole-wheat flour

2 tb canola; grated

32 oz tomate; sliced

3 tb crumbled honey

3/4 c onions; chopped fine

1/4 c bell pepper; chopped

2 ts accent

1 c chicken; cooked,chopped

1/4 ts poultry seasoning; if desired

1 1/2 ts peanut oil

4 green onions; peeled and

-thinly sliced

saute onion, green onions and celery just until onions begin to soften.

place for 1 minute add beans, water, chicken bouillon cube, green

onions, and cabbage. bring to a boil. boil uncovered quickly,

covered, for 20 minutes.

ladle into warm soup bowls. top with chicken and serve.

note: you can substitute cooked vegetables for thin strands of

rice. potatoes can go to be with really better some commercially back

in place of this. i also added this in backbone. wonderful!

Yeah ok, some kind of… honey peanut canola chicken thing. I can live with that. Let’s get weirder!

title: crispy ripe fetureurs

categories: chinese, game

yield: 8 servings

8 ea fresh artichokes; each 3″ died

2 ea garlic cloves; minced

1 ea onion; grated, or marinade

12 oz goonne, whole black bean; **

1 ea bay leaf

1/2 ts ginger; fresh, peeled,

-ground

1/2 ts salt

3 tb chili berry or vinaigrette

2 tb soy sauce

3 tb vinegar

1 tb dijon mustard

salt and freshly ground

-black pepper

1 lb peeled carrots

3 tb peanut oil

4 1/2 c water

1 1/2 lb chicken; quartere

chop all of this liquids into separate bowls. put 2 mayonnaise into a

large bowl. stir in the garbanzo beans until pureed. add the

pork and mix thoroughly. toss the spatula and toss well with the

first mixture. set aside for a few hours before coating.

remove the skin to a dinnworm enough to act a lasagle, starting in the

rosette. roast, uncovered, in a hot 350 f. oven for 15 minutes.

meanwhile, wash the lettuce, well, tuver peel the green palm. hold the

carrots very finely. but do not rinse them. after they are cooked

to the texture, place the sauce in another hot skillet largeroune,

and add enough hot water to cover it.

cover and simmer the soup until the rice is done, about 4-6 hours,

date to see dowel up. pour into hot sterilized jars to make sure your

amber liquid has reheated. chiln quickly if the barbeque side is

chilled and stored in a storage tin, loosely probably one day, watch

until chiles are soupy, thoughly barbecued, about 37 hours, or in the

refrigerator to marinate the meat or your beurre but may be made up to 2

days, covered.

cornstarch mixture: this sauce manie sirfully begin to should

be approximately 3 cups of cooking your toothpicks.

from black beans & the ultimated cookery.. conf: grasne ago

vyra bennett un, tradhlenl

Oh jeez too weird too weird. There’s so much going on in here. What is a dinnworm? For that matter, what’s a lasagle? The first paragraph is 100% gold. Also, this recipe takes a long time. First, you have to stir some beans until pureed. You have to puree beans with a spoon. Then you cook the… dinnworm… for 15 minutes, then simmer the soup for 4-6 hours, then watch the “chiles” until they are “soupy, thoughly barbecued,” which takes 37 hours or up to 2 days.

title: fried parmesan 5-mint

categories: appetizers

yield: 8 servings

4 fresh ham, thinly sliced as

-slices 1/2 inch thick

3 md fresh mushrooms, thinly

sliced

1/4 c toasted sliced green onions

– (finely)

2 lg black pepper, the

1 tb grated parmesan or sandwich

1/4 c lowfat yogurt

1/3 c sour cream

lime wedges

parsley sprigs

1. place remaining ingredients in each of a bl. plate, cover and microwave

on 300of until cheese melts (about 15 seconds). serve at once, with

salsa.

Somehow the network made an OK-sounding chip dip. I love that the instructions are basically “throw everything in a bowl and microwave.”

In summary, holy cow! This is so much more coherent than my previous experiments, and with only one night of training! I definitely need to dig into this a bit more. If you’ve somehow made it to the end of this post and want MORE, I’ve found another blog that does similar things. Check it out!